HAL

Human-centered Analytics Lab

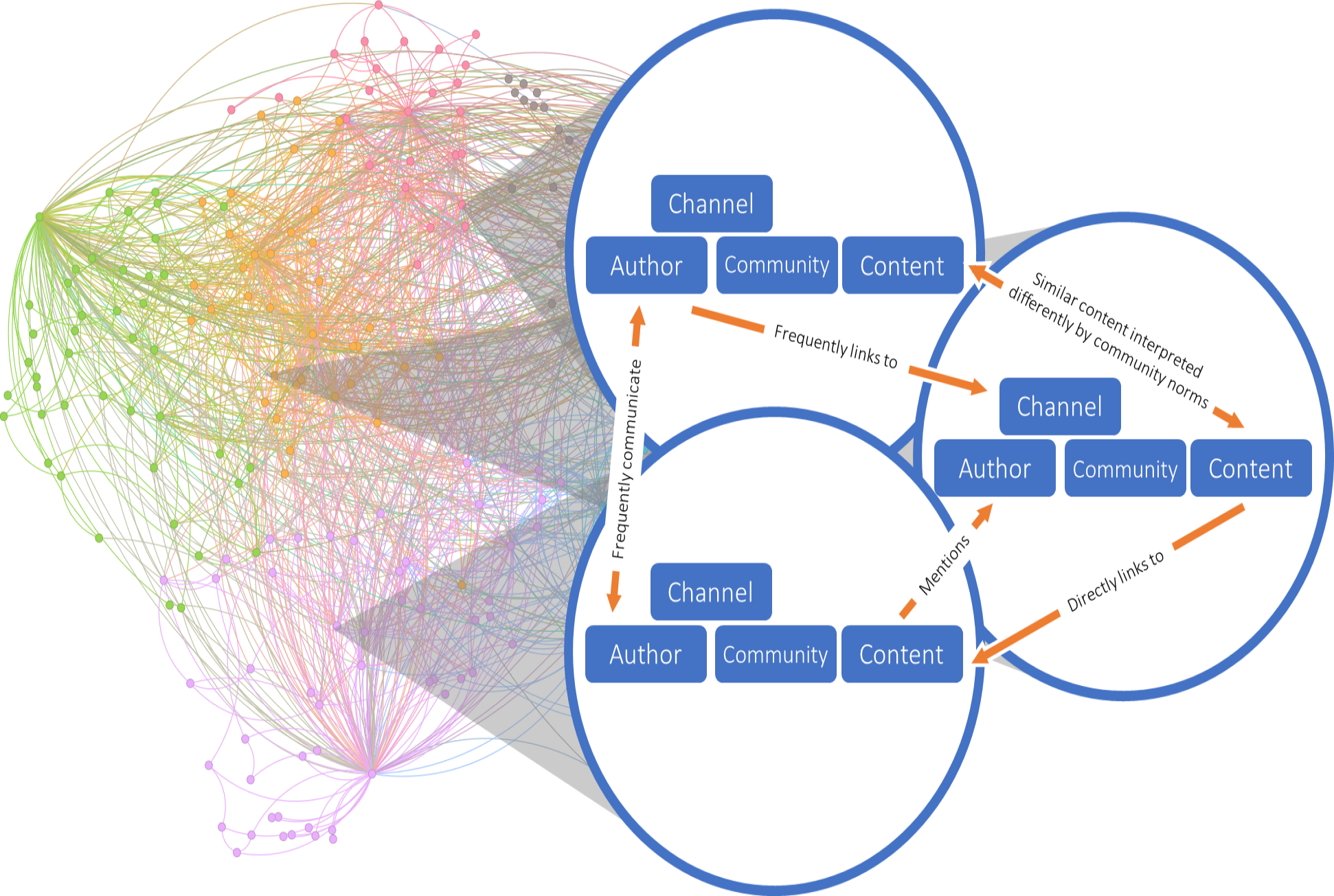

The person, technology, and the interaction of the two. This is a broad framing of a research lab that is interdisciplinary by design and pragmatic in approach. The Human-centered Analytics Lab (HAL) combines disparate skillsets and foundational disciplines to solve problems – all seeking to better understand the human condition in the context of the digital life of persons.

We regard analytics as the translational arm of more foundational areas of data science. In a lab group like no other we know of, multiple fields usually isolated from one another are merged into an interdisciplinary mash-up of technology, psychology, methodology, and business. These areas generally sit in different departments that span multiple colleges or schools in a university environment. They often use different language, terms, and theoretical lenses to explore similar phenomena, which causes great confusion in many contexts. This interdisciplinary mash-up, though, is our playing field. HAL sits at the intersection of these areas and looks at the world with a unique and, dare we say, realistic approach. We are “T-shaped” in structure: wide (horizontal) in foundational theories and deep (vertical) in methods. The HAL approach is one in which analytics have a purpose: problems are framed, critically considered, and evaluated with rigorous methods in an effort to understand the human condition. We find problems and then solutions; identify inputs and assess outputs; test stimuli on responses; identify causes and their effects; explain and predict.

Our work cuts across what would traditionally be part computer science, part statistics, part psychology. The intersection of these areas makes for research that is more interesting than most and unlikely to be done in other labs. Why? One reason is that the skillsets represented here are not usually combined for a common goal. Another reason is that journal outlets in one area are generally siloed: it is difficult to navigate multiple outlets due, in part, to the language used but also to the methods and framing of problems. Again, this is our playing field. We are not beholden to a rigid disciplinary framing of theory or method. Not only do we use methods, we also develop them, and we do so for a purpose. New questions in a changing world cannot always be answered with existing methods, hence the need to work on methods in the context of understanding the human condition.

The co-directors are both endowed chairs in analytics, but in different areas of analytics. There are relatively few chairs in analytics and we know of no other place where two senior co-directors come together in a single lab. We think that provides a special context in which to work. No one in HAL is beholden to a particular area or disciplinary boundary. Most scholars tend to publish in a particular area; we are aiming beyond disciplinary boundaries. Both co-directors now bring records established in different spaces to a common space, working together to advance our understanding of the human condition. Being based in a business school provides yet another interesting context, in that the research aims to be applied and can help understand the human condition, especially in one’s work, at work, and more generally, in the organization. In a way, an argument can be made that most endeavors can be framed in business or at least use business principles. Context matters, and work is an important context of society and therefore of the human condition. Thus, rather than studying behavior in a vacuum, so to speak, we look for real-world applications. That said, we value lab studies for the purity of effects they can illustrate, and we use them ourselves for evaluating theories and impacts. We also believe that a lab-like study can be implemented in the real world. We regard the world as a large laboratory. The aim, though, is application and understanding.

Public Health, Policy, & Social Good

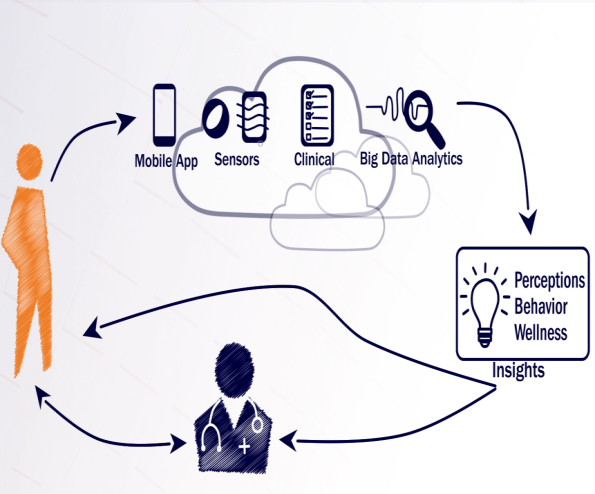

Research on public health, policy, and social good explores the impact of grand societal challenges such as the trust and credibility problem regarding online health information and news, the opioid epidemic and mental health crisis, and the proportionately small amount of research at the intersection of AI/data science and social good. Example research:

- Abbasi, A., Chiang, R., & Xu, J. (2023). Data Science for Social Good. Journal of the AIS, 24(6).

- Kitchens, B., Claggett, J. C. & Abbasi, A. (2024). Timely, Granular, and Actionable: Designing a Social Listening Platform for Public Health 3.0. MIS Quarterly, forthcoming.

- Abbasi, A., Parsons, J., Pant, G., Sheng, O., & Sarker, S. (2024). A Design Perspective on Artificial Intelligence: Charting a Pathway. Information Systems Research, forthcoming.

- Abbasi, A., Merrill, R. L., Rao, R., & Sheng, O. (2024). Preparedness and Response in the Century of Disasters: Overview of Research Frontiers. Information Systems Research, forthcoming.

- Jiang, Z., Seyedi, S., Griner, E. L., Abbasi, A., Bahrami Rad, A., Kwon, H., … & Clifford, G. D. (2024). Multimodal Mental Health Digital Biomarker Analysis from Remote Interviews using Facial, Vocal, Linguistic, and Cardiovascular Patterns. IEEE Journal of Biomedical and Health Informatics, forthcoming.

- Seyedi, S., Griner, E., Corbin, L., Jiang, Z., … Abbasi, A., Cotes, R., & Clifford, G. D. (2023). Using HIPAA–Compliant Transcription Services for Virtual Psychiatric Interviews: Pilot Comparison Study. JMIR Mental Health, 10, e48517.

- Angst, C. M., Wowak, K. D., Handley, S. M., & Kelley, K. (2017). Antecedents of Information Systems Sourcing Strategies in US Hospitals. MIS Quarterly, 41(4), 1129-1152.

Affiliated Faculty:

Psychometric NLP & AI Governance

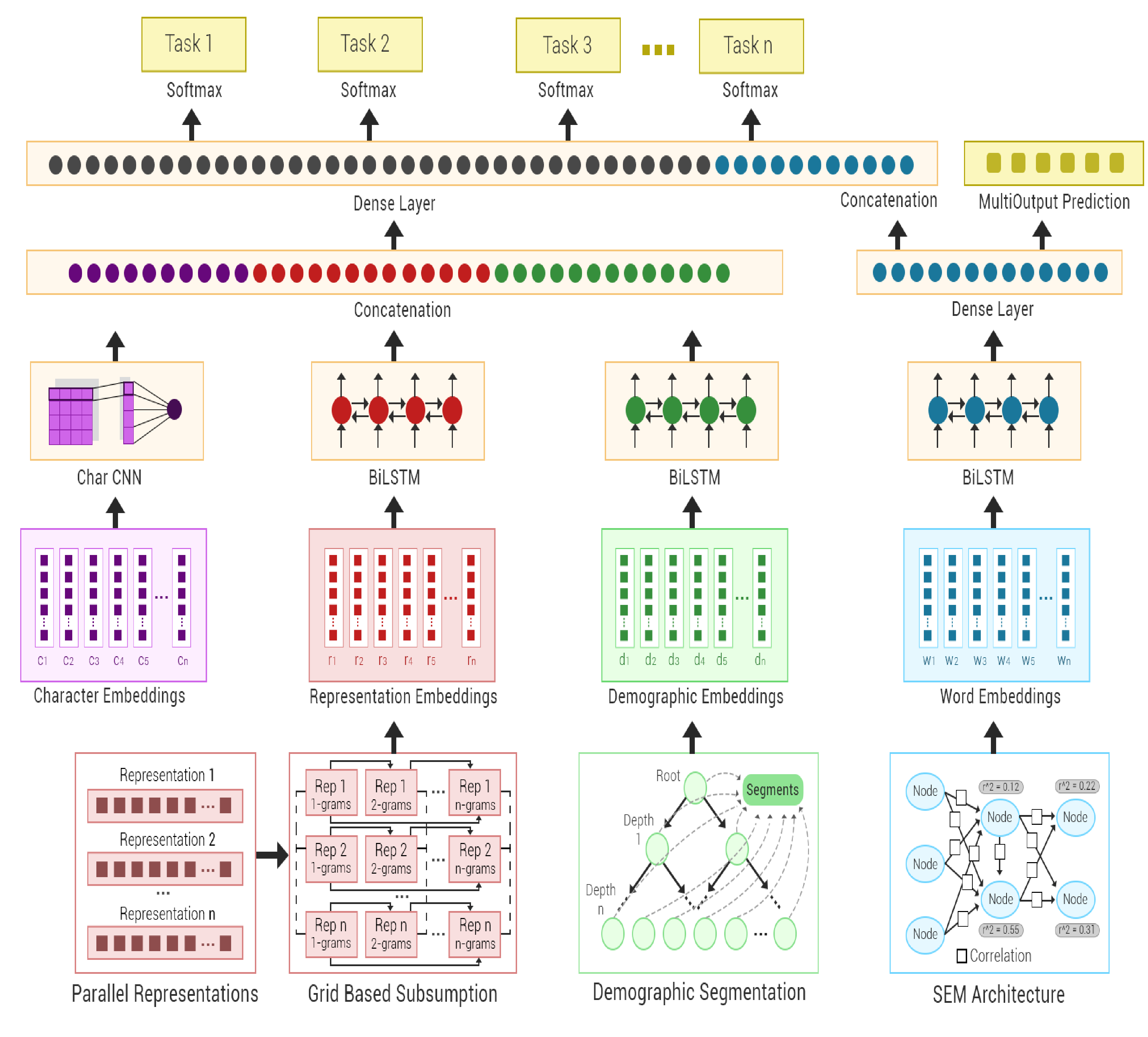

Research on psychometric NLP, fairness, and AI governance includes novel machine learning methods for text classification, user-centric language modeling, and fairness in NLP. We explore ways to better understand the human condition through machine learning, with implications for downstream policies, interventions, decision-making, and AI governance. Example research:

- Lalor, J. P., Abbasi, A., Oketch, K., Yang, Y., & Forsgren, N. (2024). Should Fairness be a Metric or a Model? A Model-based Framework for Assessing Bias in Machine Learning Pipelines. ACM Transactions on Information Systems, forthcoming.

- Yang, K., Lau, R. Y. K., & Abbasi, A. (2023). Getting Personal: A Deep Learning Artifact for Text-Based Measurement of Personality. Information Systems Research, 34(1), 194-222.

- Guo, Y., Yang, Y., & Abbasi, A. (2022). Auto-debias: Debiasing Masked Language Models with Automated Biased Prompts. Association for Computational Linguistics, May 22-27, 1012-1023.

- Ahmad, F., Abbasi, A., Li, J., Dobolyi, D., Netemeyer, R., Clifford, G., & Chen, H. (2020). A Deep Learning Architecture for Psychometric Natural Language Processing. ACM Transactions on Information Systems, 38(1), no. 6.

- Lalor, J. P., Wu, H., Munkhdalai, T., & Yu, H. (2018). Understanding Deep Learning Performance Through an Examination of Test Set Difficulty: A Psychometric Case Study. Empirical Methods in Natural Language Processing, Oct 31 – Nov 4, 4711-4716.

- Abbasi, A., Li, J., Clifford, G. D., & Taylor, H. A. (2018). Make ‘Fairness by Design’ Part of Machine Learning. Harvard Business Review, August 5.

Affiliated Faculty and Research Scientists:

Behavior Modeling & Prediction

Research on behavior modeling & prediction includes use of statistical methods to understand the human condition and machine learning techniques for predicting behavior. Example research:

- Abbasi, A., Dobolyi, D., Vance, A., & Zahedi, F. M. (2021). The Phishing Funnel Model: A Design Artifact to Predict User Susceptibility to Phishing Websites. Information Systems Research, 32(2), 410-436.

- Adjerid, I., & Kelley, K. (2018). Big Data in Psychology: A Framework for Research Advancement. American Psychologist, 73(7), 899-917.

- Glavas, A., & Kelley, K. (2014). The Effects of Perceived Corporate Social Responsibility on Employee Attitudes. Business Ethics Quarterly, 24(2), 165-202.

- Abbasi, A., Lau, R. Y. K., & Brown, D. E. (2015). Predicting Behavior. IEEE Intelligent Systems, 30(3), 35-43.

Affiliated Faculty:

Designing for Digital Experimentation

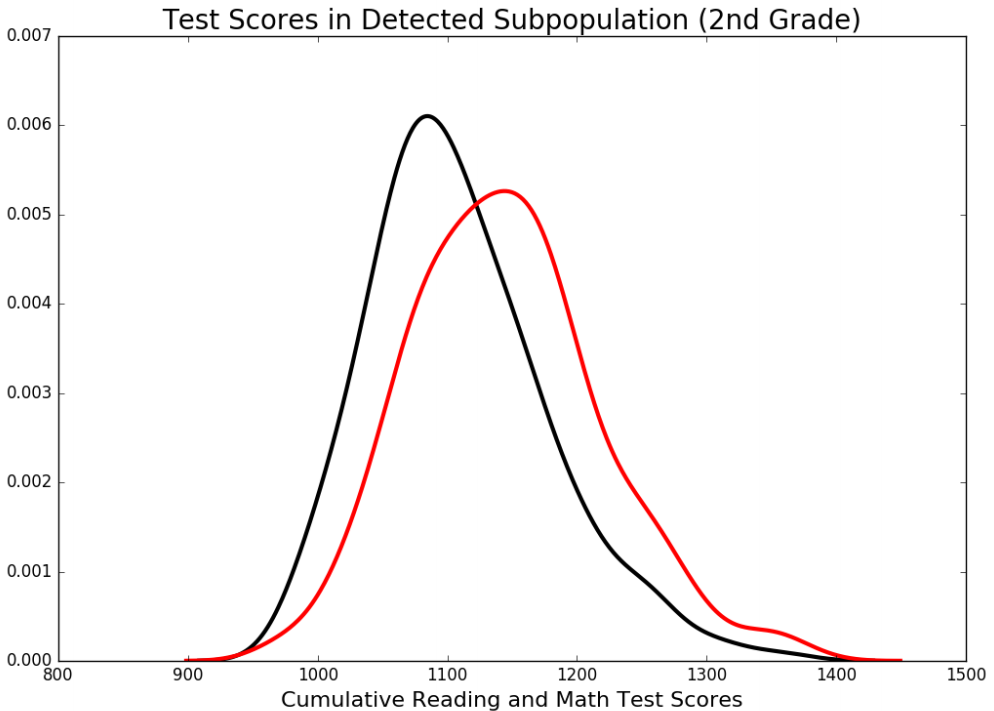

Digital experiments and randomized controlled trials are crucial for understanding the potential effectiveness and implications of decisions and policies. Experiment design research explores statistical and machine learning strategies and methods for sampling, effect sizes, and parameter estimation. More recently, adapting such strategies to digital experimentation settings has become important for understanding heterogeneous treatment effects, bias in estimation, and both statistical and practical significance.

- Somanchi, S., Abbasi, A., Kelley, K., Dobolyi, D., & Yuan, T. T. (2023). Examining User Heterogeneity in Digital Experiments. ACM Transactions on Information Systems, 41(4), 1-34.

- Anderson, S. F., & Kelley, K. (2022). Sample Size Planning for Replication Studies: The Devil is in the Design. Psychological Methods, forthcoming.

- Somanchi, S., Abbasi, A., Dobolyi, D., Kelley, K., & Yuan, T. T. (2021). User and Session Heterogeneity in Digital Experiments: A Framework for Analysis and Understanding. MIT Conference on Digital Experimentation, November 4-5, 2021.

- Kelley, K., Darku, F. B., & Chattopadhyay, B. (2019). Sequential Accuracy in Parameter Estimation for Population Correlation Coefficients. Psychological Methods, 24(4), 492-515.

- Kelley, K., Darku, F. B., & Chattopadhyay, B. (2018). Accuracy in Parameter Estimation for a General Class of Effect Sizes: A Sequential Approach. Psychological Methods, 23(2), 226-243.

- McFowland III, E., Somanchi, S., & Neill, D. B. (2018). Efficient Discovery of Heterogeneous Treatment Effects in Randomized Experiments Via Anomalous Pattern Detection. arXiv, forthcoming, https://arxiv.org/pdf/1803.09159.pdf

- Kelley, K., & Preacher, K. J. (2012). On Effect Size. Psychological Methods, 17(2), 137-152.

- Preacher, K. J., & Kelley, K. (2011). Effect Size Measures for Mediation Models: Quantitative Strategies for Communicating Indirect Effects. Psychological Methods, 16(2), 93-115.

Affiliated Faculty:

Forecasting Adverse Events

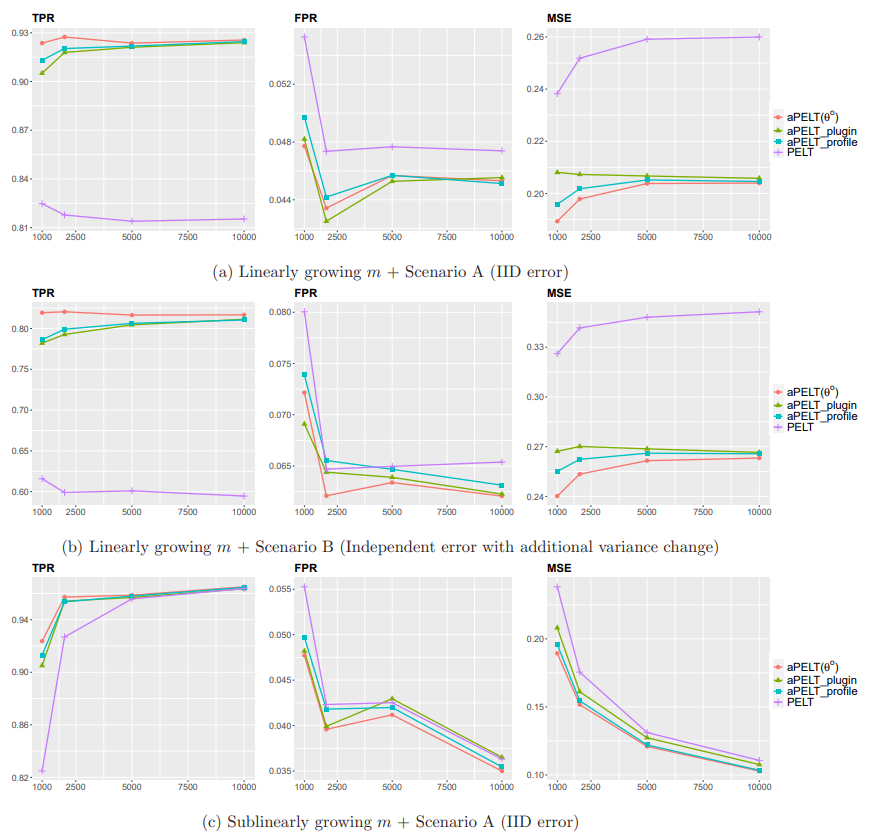

We are exploring statistical and machine learning methods for forecasting events with significant societal implications using time series data.

- Zhao, Z., & Yau, Y. C. (2021). Alternating Pruned Dynamic Programming for Multiple Epidemic Change-point Estimation. Journal of Computational and Graphical Statistics, forthcoming, https://arxiv.org/abs/1907.06810

- Jiang, F., Zhao, Z., & Shao, X. (2021). Time Series Analysis of COVID-19 Infection Curve: A Change-point Perspective. Journal of Econometrics, forthcoming.

- Ahmad, F., Abbasi, A., Kitchens, B., Adjeroh, D. A., & Zeng, D. (2022). Deep Learning for Adverse Event Detection from Web Search. IEEE Transactions on Knowledge and Data Engineering, 34(6), 2681-2695.

- Abbasi, A., Li, J., Adjeroh, D. A., Abate, M., & Zheng, W. (2019). Don’t Mention It? Analyzing User-Generated Content Signals for Early Adverse Event Warnings. Information Systems Research, 30(3), 1007-1028.

- Speakman, S., Somanchi, S., McFowland III, E., & Neill, D. B. (2016). Penalized Fast Subset Scanning. Journal of Computational and Graphical Statistics, 25(2), 382-404.

Affiliated Faculty: