Watching a train wreck

ND expert promotes balance of moderation and engagement in technology ethics

Published: February 13, 2023 / Author: Notre Dame Stories

While social media companies draw criticism for whom they choose to ban, tech ethics experts say the more important function these companies control happens behind the scenes, in what they recommend.

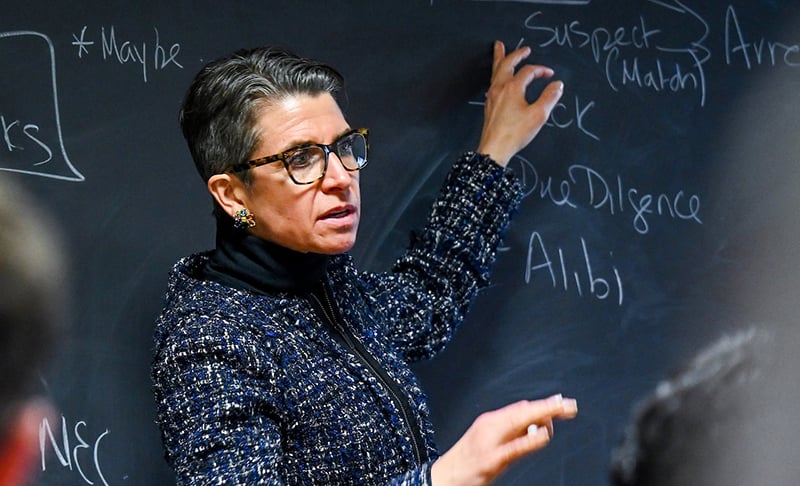

Kirsten Martin, director of the Notre Dame Technology Ethics Center (ND TEC), argues that optimizing recommendations based on a single factor — engagement — is an inherently value-laden decision.

People may be fascinated by and drawn to the most polarizing content; we can’t look away from a train wreck. But there are limits, and social media platforms like Facebook and Twitter constantly struggle to find the right balance between free speech and moderation, she says.


“There is a point where people leave the platform,” Martin says. “Totally unmoderated content, where you can say as awful material as you want, there’s a reason why people don’t flock to it. Because while it seems like a train wreck when we see it, we don’t want to be inundated with it all the time. I think there is a natural pushback.”

Elon Musk’s recent changes at Twitter have transformed this debate from an academic exercise into a real-time test case. Musk may have thought the question of whether to ban Donald Trump was central, Martin says, but a single executive can decide a ban. Choosing what to recommend takes technology such as algorithms and artificial intelligence, and people to design and run it.

“The thing that’s different right now with Twitter is getting rid of all the people that actually did that,” Martin says. “The content moderation algorithm is only as good as the people that labeled it. If you change the people that are making those decisions or if you get rid of them, then your content moderation algorithm is going to go stale, and fairly quickly.”

Martin, an expert in privacy, technology and business ethics and the William P. and Hazel B. White Center Professor of Technology Ethics in the Mendoza College of Business, has closely analyzed how platforms promote content. Wary of renewed criticism over the online misinformation that preceded the 2016 presidential election, she says, social media companies put up new guardrails on what content and groups to recommend in the run-up to the 2020 election.

Facebook and Twitter were consciously proactive about content moderation but stopped after the polls closed. Martin says Facebook “thought the election was over” and, although it knew its algorithms were recommending hate groups, didn’t stop because “that type of material got so much engagement.” With more than 1 billion users on the platform, the impact was profound.

Martin wrote a chapter on this topic in “Ethics of Data and Analytics,” a case-study textbook she edited that was published in 2022. In “Recommending an Insurrection: Facebook and Recommendation Algorithms,” she argues that Facebook made conscious decisions to prioritize engagement because that was its chosen metric for success.

“While the takedown of a single account may make headlines, the subtle promotion and recommendation of content drove user engagement,” she wrote. “And, as Facebook and other platforms found out, user engagement did not always correspond with the best content.” Facebook’s own self-analysis found that its technology led to misinformation and radicalization. In April 2021, an internal report at Facebook found that “Facebook failed to stop an influential movement from using its platform to delegitimize the election, encourage violence, and help incite the Capitol riot.”

A central question is whether the platform or its users are at fault. Martin says this debate within the philosophy of technology resembles the conflict over guns, in which some people blame the guns and others blame the people who use them for harm. “Either the technology is a neutral blank slate, or on the other end of the spectrum, technology determines everything and almost evolves on its own,” she says. “Either way, the company that’s either shepherding this deterministic technology or blaming it on the users, the company that actually designs it has actually no responsibility whatsoever.

“That’s what I mean by companies hiding behind this, almost saying, ‘Both the process by which the decisions are made and also the decision itself are so black boxed or very neutral that I’m not responsible for any of its design or outcome.’” Martin rejects both claims.

One example that illustrates her conviction is Facebook’s promotion of super users, people who post material constantly. The company amplified super users because they drove engagement, even though their posts tended to include more hate speech. Think Russian troll farms. Computer engineers discovered this trend and proposed solving it by tweaking the algorithm. According to leaked documents, the company’s policy shop overruled the engineers because it feared a hit to engagement, as well as accusations of political bias, since far-right groups were often super users.

Another example in Martin’s textbook features an Amazon driver fired after four years of delivering packages around Phoenix. He was notified by an automated email after the algorithms tracking his performance “decided he wasn’t doing his job properly.”

The company was aware that delegating the firing decision to machines could lead to mistakes and damaging headlines, “but decided it was cheaper to trust the algorithms than to pay people to investigate mistaken firings so long as the drivers could be replaced easily.” Martin instead argues that acknowledging the “value-laden biases of technology” is necessary to preserve the ability of humans to control the design, development and deployment of that technology.

Originally posted on ND Stories.
